VPCOMPRESSD - Packed COMPRESS Dword

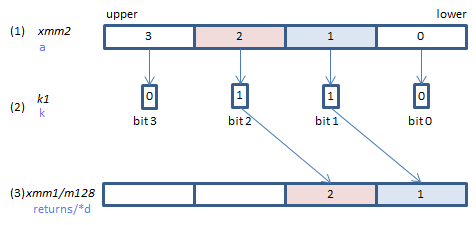

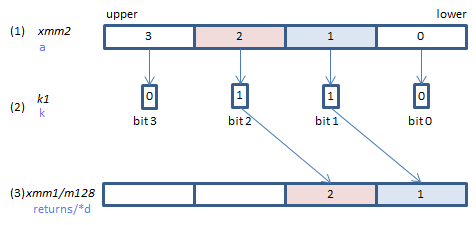

VPCOMPRESSD xmm1/m128{k1}{z}, xmm2 (V5+VL)

__m128i _mm_mask_compress_epi32(__m128i s, __mmask8 k, __m128i a)

__m128i _mm_maskz_compress_epi32(__mmask8 k, __m128i a)

void _mm_mask_compressstoreu_epi32(void* d, __mmask8 k, __m128i a)

For each bit of (2) that is set, the corresponding element of (1) is copied to (3), packed contiguously starting from the lowest position.

If (3) is an XMM register and {z} is specified (_maskz_ intrinsic used), the remaining upper elements of (3) are cleared to zero.

If (3) is an XMM register and {z} is not specified (_mask_ intrinsic used), the remaining upper elements of (3) are left unchanged (copied from s).

If (3) is memory (_compressstoreu_ intrinsic used), the remaining upper elements of (3) are not written.
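
The register-destination behavior described above can be sketched as a scalar model. This is only an illustration, not the actual instruction or intrinsic: the function name `compress_epi32_x4_model` and its `zero` flag are hypothetical, with `zero != 0` standing for {z} (_maskz_) and `zero == 0` for merging (_mask_).

```c
#include <stdint.h>

/* Hypothetical scalar model of the 128-bit register-destination forms.
   out: result, s: pass-through source, k: mask, a: source (4 dwords each).
   zero != 0 models {z} (_maskz_); zero == 0 models merging (_mask_). */
static void compress_epi32_x4_model(uint32_t out[4], const uint32_t s[4],
                                    uint8_t k, const uint32_t a[4], int zero)
{
    int n = 0;                        /* next free slot in out */
    for (int i = 0; i < 4; i++) {
        if (k & (1u << i))
            out[n++] = a[i];          /* selected elements, packed from the lowest */
    }
    for (; n < 4; n++)
        out[n] = zero ? 0 : s[n];     /* remaining upper elements */
}
```

With a = {10, 11, 12, 13}, s = {100, 101, 102, 103} and k = 0x0A (bits 1 and 3 set), the merging form yields {11, 13, 102, 103} and the zeroing form yields {11, 13, 0, 0}.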

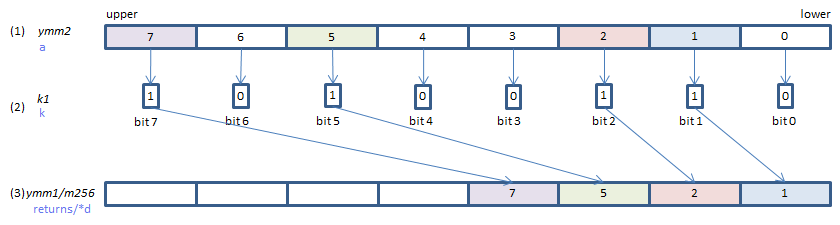

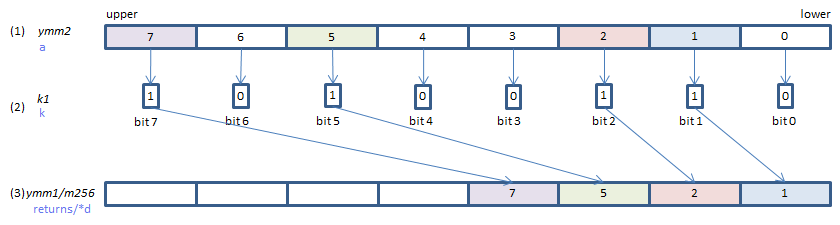

VPCOMPRESSD ymm1/m256{k1}{z}, ymm2 (V5+VL)

__m256i _mm256_mask_compress_epi32(__m256i s, __mmask8 k, __m256i a)

__m256i _mm256_maskz_compress_epi32(__mmask8 k, __m256i a)

void _mm256_mask_compressstoreu_epi32(void* d, __mmask8 k, __m256i a)

For each bit of (2) that is set, the corresponding element of (1) is copied to (3), packed contiguously starting from the lowest position.

If (3) is a YMM register and {z} is specified (_maskz_ intrinsic used), the remaining upper elements of (3) are cleared to zero.

If (3) is a YMM register and {z} is not specified (_mask_ intrinsic used), the remaining upper elements of (3) are left unchanged (copied from s).

If (3) is memory (_compressstoreu_ intrinsic used), the remaining upper elements of (3) are not written.
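
The memory-destination case can be sketched the same way. The function below is a hypothetical scalar model for the 256-bit form (8 dwords); its name and its return value (the number of dwords written) are assumptions added for illustration, since the real _compressstoreu_ intrinsic returns void.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical scalar model of the 256-bit memory-destination form.
   d: destination (may be unaligned), k: mask, a: source (8 dwords).
   Returns the number of dwords written, which the real intrinsic does not. */
static int compressstoreu_epi32_x8_model(void *d, uint8_t k, const uint32_t a[8])
{
    unsigned char *p = (unsigned char *)d;
    int n = 0;                            /* dwords written so far */
    for (int i = 0; i < 8; i++) {
        if (k & (1u << i)) {
            memcpy(p + 4 * n, &a[i], 4);  /* packed from the lowest address */
            n++;
        }
    }
    return n;                             /* memory past the first n dwords is not written */
}
```

Only as many dwords as there are set bits in k are stored, so a destination buffer of that size should be enough in this model.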

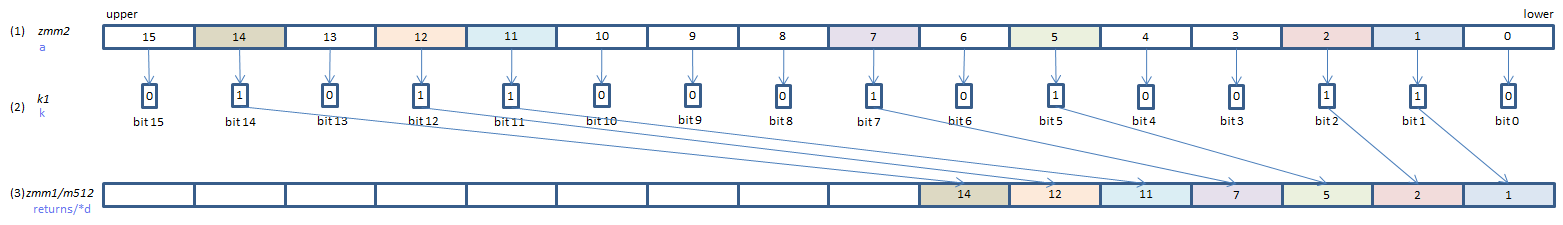

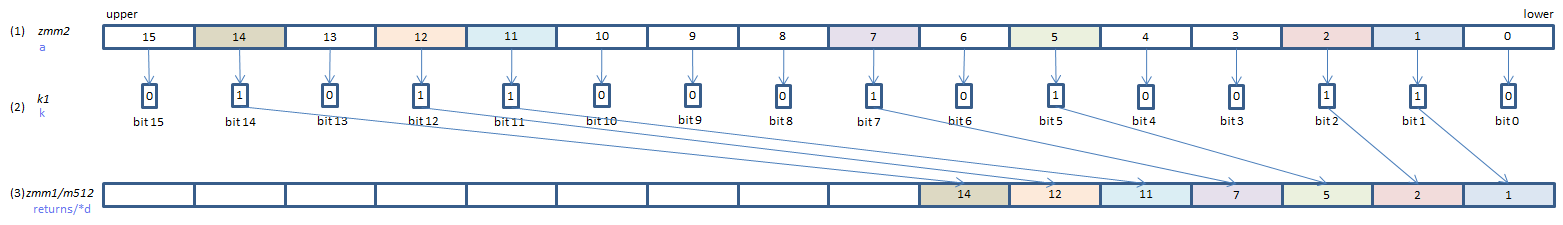

VPCOMPRESSD zmm1/m512{k1}{z}, zmm2 (V5)

__m512i _mm512_mask_compress_epi32(__m512i s, __mmask16 k, __m512i a)

__m512i _mm512_maskz_compress_epi32(__mmask16 k, __m512i a)

void _mm512_mask_compressstoreu_epi32(void* d, __mmask16 k, __m512i a)

For each bit of (2) that is set, the corresponding element of (1) is copied to (3), packed contiguously starting from the lowest position.

If (3) is a ZMM register and {z} is specified (_maskz_ intrinsic used), the remaining upper elements of (3) are cleared to zero.

If (3) is a ZMM register and {z} is not specified (_mask_ intrinsic used), the remaining upper elements of (3) are left unchanged (copied from s).

If (3) is memory (_compressstoreu_ intrinsic used), the remaining upper elements of (3) are not written.
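
One possible use of the memory form is filtering an array: build a mask with a compare, store the selected elements with `_mm512_mask_compressstoreu_epi32`, then advance the output by the number of set mask bits. The sketch below is an assumption-laden illustration, not a reference implementation: `keep_greater_than` is a hypothetical helper, the intrinsic path is compiled only when `__AVX512F__` is defined, and `__builtin_popcount` assumes GCC or Clang. Without AVX-512F, only the scalar loop runs.

```c
#include <stddef.h>
#include <stdint.h>
#if defined(__AVX512F__)
#include <immintrin.h>
#endif

/* Hypothetical helper: copies every element of in[0..n) that is greater
   than threshold to out, preserving order, and returns how many were kept.
   out should have room for n elements in the worst case. */
static size_t keep_greater_than(int32_t *out, const int32_t *in, size_t n,
                                int32_t threshold)
{
    size_t kept = 0, i = 0;
#if defined(__AVX512F__)
    const __m512i t = _mm512_set1_epi32(threshold);
    for (; i + 16 <= n; i += 16) {
        __m512i a = _mm512_loadu_si512(in + i);
        __mmask16 k = _mm512_cmpgt_epi32_mask(a, t);        /* bit set where a > t */
        _mm512_mask_compressstoreu_epi32(out + kept, k, a); /* packed store */
        kept += (size_t)__builtin_popcount((unsigned)k);    /* GCC/Clang builtin */
    }
#endif
    for (; i < n; i++) {              /* scalar tail, or full fallback */
        if (in[i] > threshold)
            out[kept++] = in[i];
    }
    return kept;
}
```

Because the store writes only the selected elements, consecutive chunks can be appended back to back without clobbering earlier output.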
